A Multivariate Approach to Dilepton Analyses in the Upgraded ALICE Detector at CERN-LHC (Master's thesis)

I wrote my Master's thesis after conducting research in the ALICE collaboration for about a year.

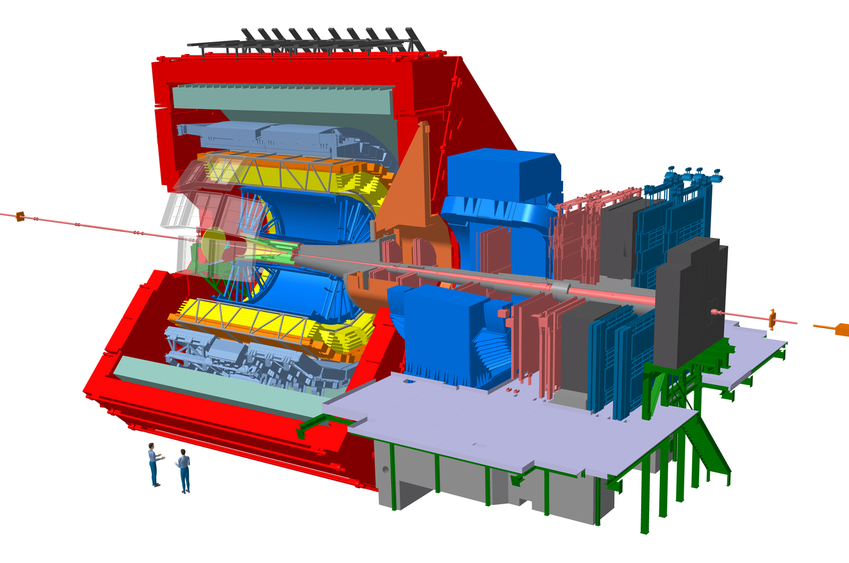

My Master's thesis focused on improving the way data from the ALICE experiment at CERN are traditionally analyzed. At ALICE, lead nuclei are accelerated to nearly the speed of light and then smashed together. These collisions create a tiny, incredibly hot and dense fireball of matter (a "mini Big Bang") that mimics the conditions of the universe just microseconds after it was born.

The challenge is to understand what's happening inside that fireball. Most particles get trapped or altered, but certain pairs of electrons and positrons (their antimatter twins) fly out untouched. These pairs act as clean "messengers," carrying pristine information about the fireball's interior. The problem is that for every one messenger pair we want to study (the "signal"), the collision creates millions of other random particles that look similar (the "background"). It's a classic "needle in a haystack" problem.

Traditionally, scientists find this signal by applying a rigid checklist of filters, but this approach can be inefficient and throw away a lot of the valuable signal along with the background. My work tested a much "smarter" method: using a type of artificial intelligence called a deep neural network. Instead of a simple checklist, the AI learns to look at many different particle characteristics simultaneously to distinguish the real messengers from the noise.
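To make the difference between a checklist of cuts and a multivariate selection concrete, here is a minimal toy sketch. It is not the thesis analysis: the two features, their distributions, and the cut values are invented for illustration, and a Fisher linear discriminant stands in for the deep neural network because it fits in a few lines of plain Python. The point is only that two features which are weak on their own can separate signal from background well once they are combined.

```python
import random

random.seed(7)  # fixed seed so the toy numbers are reproducible

def make_tracks(n, shift):
    """Toy tracks with two strongly correlated features (hypothetical)."""
    tracks = []
    for _ in range(n):
        z = random.gauss(0.0, 1.0)                 # fluctuation shared by both features
        x1 = z + random.gauss(0.0, 0.3)
        x2 = z + shift + random.gauss(0.0, 0.3)    # only x2 carries the class shift
        tracks.append((x1, x2))
    return tracks

def mean(values):
    return sum(values) / len(values)

def covariance(tracks):
    """Return (var_x1, cov_x1x2, var_x2) of a sample."""
    m1 = mean([t[0] for t in tracks])
    m2 = mean([t[1] for t in tracks])
    v1 = mean([(t[0] - m1) ** 2 for t in tracks])
    v2 = mean([(t[1] - m2) ** 2 for t in tracks])
    c12 = mean([(t[0] - m1) * (t[1] - m2) for t in tracks])
    return v1, c12, v2

def fisher_weights(sig, bkg):
    """Fisher discriminant: w = S^-1 (mu_sig - mu_bkg), S = mean class covariance."""
    vs, cs, ws = covariance(sig)
    vb, cb, wb = covariance(bkg)
    a, b, c = (vs + vb) / 2, (cs + cb) / 2, (ws + wb) / 2
    d1 = mean([t[0] for t in sig]) - mean([t[0] for t in bkg])
    d2 = mean([t[1] for t in sig]) - mean([t[1] for t in bkg])
    det = a * c - b * b
    return (c * d1 - b * d2) / det, (a * d2 - b * d1) / det

def efficiency(tracks, passes):
    """Fraction of tracks that survive a selection."""
    return sum(1 for t in tracks if passes(t)) / len(tracks)

signal = make_tracks(5000, shift=1.0)
background = make_tracks(5000, shift=0.0)

# "Checklist" selection: one independent cut per feature (arbitrary values).
def passes_cuts(t):
    return t[0] < 0.5 and t[1] > 0.5

# Multivariate selection: one cut on a combination of both features.
# For brevity the weights are fitted and evaluated on the same toy sample.
w1, w2 = fisher_weights(signal, background)

def score(t):
    return w1 * t[0] + w2 * t[1]

# Choose the score threshold so that both selections keep the same signal.
n_pass = sum(1 for t in signal if passes_cuts(t))
threshold = sorted((score(t) for t in signal), reverse=True)[n_pass - 1]

def passes_mva(t):
    return score(t) >= threshold

sig_eff_cuts = efficiency(signal, passes_cuts)
sig_eff_mva = efficiency(signal, passes_mva)
bkg_eff_cuts = efficiency(background, passes_cuts)
bkg_eff_mva = efficiency(background, passes_mva)

print(f"signal kept:     cuts {sig_eff_cuts:.3f}  multivariate {sig_eff_mva:.3f}")
print(f"background kept: cuts {bkg_eff_cuts:.3f}  multivariate {bkg_eff_mva:.3f}")
```

At equal signal efficiency, the combined score lets through far less background than the two separate cuts, because it exploits the correlation between the features. A neural network generalizes this idea to many features and non-linear combinations.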

The results were a significant step forward. The neural network improved the signal-to-background ratio by up to 60% over the conventional, cut-based approach. This makes the entire analysis more powerful and efficient, ultimately allowing physicists to get a much clearer picture of the early universe from the collision data, with the added benefit of a much leaner analysis pipeline.
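One common way to put numbers on how well the signal is isolated is to compare the signal-to-background ratio S/B and the significance before and after a change in the selection. The sketch below assumes the widely used definition S/√(S+B) for the significance, which may differ from the exact definition in the thesis. The counts are invented purely for illustration and are not results from the thesis.

```python
import math

def signal_to_background(s, b):
    """Purity-like figure of merit: signal counts per background count."""
    return s / b

def significance(s, b):
    """Assumed definition: S / sqrt(S + B)."""
    return s / math.sqrt(s + b)

def relative_gain(before, after):
    """Fractional improvement of `after` over `before`."""
    return after / before - 1.0

# Hypothetical pair counts after each selection (not thesis numbers).
cuts = {"signal": 1000.0, "background": 4000.0}
mva = {"signal": 900.0, "background": 2250.0}

sb_gain = relative_gain(
    signal_to_background(cuts["signal"], cuts["background"]),
    signal_to_background(mva["signal"], mva["background"]),
)
sig_gain = relative_gain(
    significance(cuts["signal"], cuts["background"]),
    significance(mva["signal"], mva["background"]),
)

print(f"gain in S/B:          {sb_gain:.1%}")
print(f"gain in significance: {sig_gain:.1%}")
```

The two figures of merit respond differently: S/B rewards background rejection directly, while the significance also penalizes any signal lost along the way, so its gain is typically the smaller of the two.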

Read my Master's thesis to find out all the details.

Key facts

- Project: Master's thesis (TU Wien, Austria)

- Collaborations: ALICE (CERN, Geneva, Switzerland)

- Institute: Stefan Meyer Institute for Subatomic Physics (SMI, Vienna, Austria; now the Marietta Blau Institute (MBI))

- Date: March 2018

- Time invested: 14 months

- Keywords: high-energy particle physics, heavy-ion collisions, quark-gluon plasma, data analysis, machine learning, deep learning, artificial intelligence

Abstract

ALICE, the dedicated heavy-ion experiment at CERN–LHC, will undergo a major upgrade in 2019/20. This work aims to assess the feasibility of conventional and multivariate analysis techniques for low-mass dielectron measurements in Pb-Pb collisions in a scenario involving the upgraded ALICE detector with a low magnetic field (B = 0.2 T). These electron-positron pairs are promising probes of the hot and dense medium created in collisions of ultra-relativistic heavy nuclei, as they traverse the medium without significant final-state modifications. Due to their small signal-to-background ratio, high-purity dielectron samples are required. Conventional analysis methods, which are based on sequential cuts, can provide such samples, albeit at the price of low signal efficiency. This work shows that existing methods can be improved by employing multivariate approaches to reject different background sources of the dielectron invariant mass spectrum. The major background components are dielectrons from photon conversion and combinatorial pairs. By implementing deep neural networks, the signal-to-background ratio can be improved by up to 60% over existing results in the case of pure conversion rejection and by up to 30% in the case of additional suppression of all combinatorial background components. In both cases, the gain in significance is about 15% compared to conventional approaches. Additionally, different strategies for rejecting heavy-flavor pairs (i.e., dielectrons originating from cc̄ or bb̄) are studied and some of their major challenges are identified. In general, it is concluded that multivariate techniques are a powerful and promising approach to dielectron analyses, since they significantly improve the results over conventional methods in terms of signal-to-background ratio and significance.
Moreover, these techniques remove complexity from existing implementations, as they make it possible to (1) base the analyses on individual tracks (instead of track pairs), essentially without sacrificing analysis performance, (2) render some of the existing and involved analysis methods obsolete and, to some degree, (3) obviate the need for manual input feature engineering.
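
The track-based idea in point (1) can be sketched roughly as follows: each track is accepted or rejected on its own, and only the surviving electrons and positrons are combined into pairs for the invariant mass spectrum. This is a minimal illustration, not the thesis implementation. The track layout, the `score` field (standing in for a per-track classifier output), and the threshold are hypothetical.

```python
import math
from itertools import product

ELECTRON_MASS = 0.000511  # GeV/c^2

def energy(track):
    """Energy of a track under the electron mass hypothesis (GeV)."""
    px, py, pz = track["p"]
    return math.sqrt(px * px + py * py + pz * pz + ELECTRON_MASS ** 2)

def invariant_mass(t1, t2):
    """Invariant mass of a two-track pair: m^2 = (E1 + E2)^2 - |p1 + p2|^2."""
    e = energy(t1) + energy(t2)
    px = t1["p"][0] + t2["p"][0]
    py = t1["p"][1] + t2["p"][1]
    pz = t1["p"][2] + t2["p"][2]
    m2 = e * e - (px * px + py * py + pz * pz)
    return math.sqrt(max(m2, 0.0))  # guard against tiny negative rounding errors

def select_tracks(tracks, threshold):
    """Track-level selection: keep tracks whose score passes the threshold."""
    return [t for t in tracks if t["score"] >= threshold]

def unlike_sign_pairs(tracks):
    """All electron-positron combinations in one event."""
    electrons = [t for t in tracks if t["charge"] < 0]
    positrons = [t for t in tracks if t["charge"] > 0]
    return list(product(electrons, positrons))

# One hypothetical event: momenta in GeV/c, scores invented for illustration.
event = [
    {"p": (0.5, 0.0, 0.0), "charge": -1, "score": 0.9},
    {"p": (-0.5, 0.0, 0.0), "charge": +1, "score": 0.8},
    {"p": (0.1, 0.2, 0.0), "charge": +1, "score": 0.2},  # fails the track selection
]

all_pairs = unlike_sign_pairs(event)
selected_pairs = unlike_sign_pairs(select_tracks(event, threshold=0.5))
masses = [invariant_mass(a, b) for a, b in selected_pairs]

print(f"pairs without selection: {len(all_pairs)}")
print(f"pairs after selection:   {len(selected_pairs)}")
print("invariant masses (GeV/c^2):", [round(m, 4) for m in masses])
```

Because every rejected track removes all pairs it would have taken part in, a selection applied to individual tracks also reduces the number of random (combinatorial) pairs.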